Alugha Updates | March 2022 - what's new at alugha

Here at alugha, we love technology and leveraging it in creative ways for our users to provide unique features and a stellar experience.

The evolution of our encoding process has taken a long time. But all that time has paid off.


Estimated reading time: 5 minutes

When alugha started, we had one server, and it did its job. A video was encoded here and there, but eventually we ran into our first performance problems, so we outsourced the development environment. More and more functions and videos were added: from 1-2 short videos a week to 1-2 a day, and from 3-5 minutes per video to 20 minutes or even whole feature films. After running into performance problems again and again, we had to come up with something. We needed a strategy that was worthwhile. Right from the start, we wanted to constantly optimize our product and make our users happy with it.

So what did we do? We took a very critical look at everything we had built in 2016 and found that we could not go on like this if we wanted to be ready for the next big influx. Something completely new was needed.

Let's take a look at the individual areas that we built from scratch or optimized:

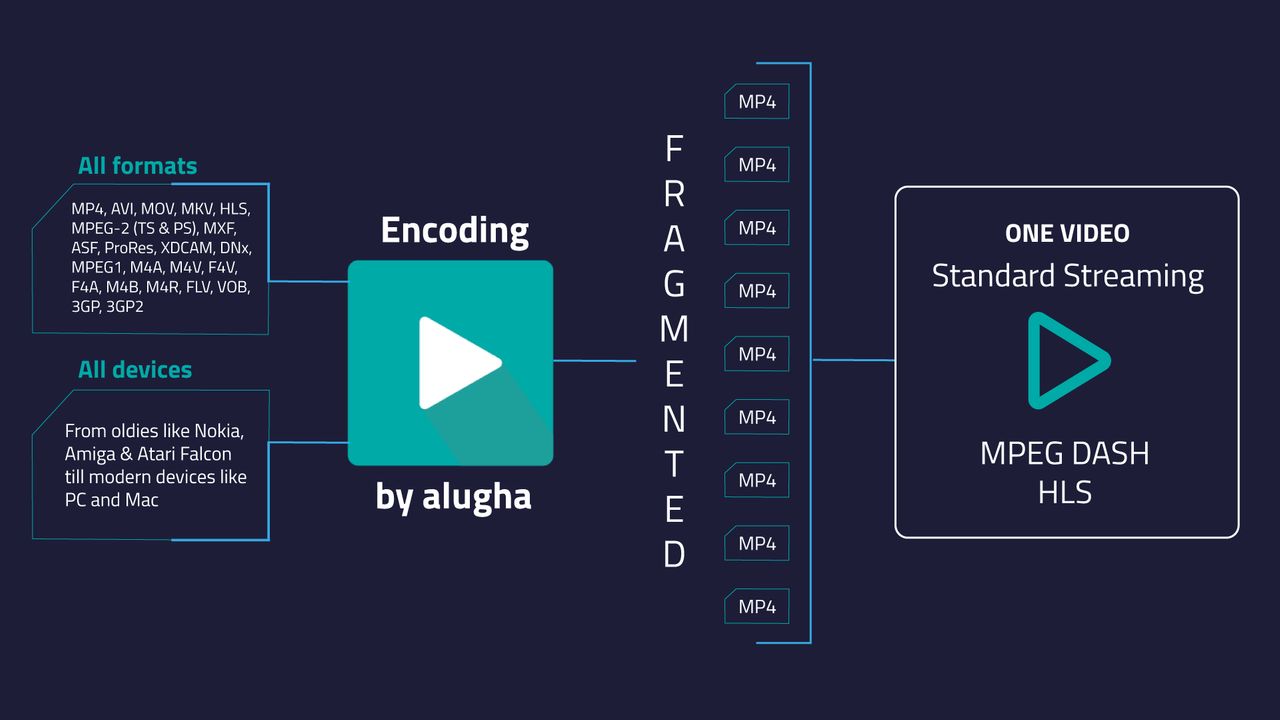

The number of devices you can use to record video seems endless. There are people who still work with the Amiga or Atari Falcon. Some have an older mobile phone, others a state-of-the-art iPhone. Then there are those who created their first archive many years ago and worked with DivX, XviD or MPEG1. We could continue this list and easily reach 150 or more video formats. This is a big challenge: just because eager filmmakers can watch a video on their own device does not mean that a video platform (in this case ours) can do anything with it. So we have to make sure that we can handle as many formats as possible. In general, we cover a lot of popular formats, such as MP4, AVI, MOV, MKV, HLS, MPEG-2 (TS & PS), MXF, ASF, ProRes, XDCAM, DNx, MPEG1, M4A, M4V, F4V, F4A, M4B, M4R, FLV, VOB, 3GP and 3GP2, to name a few. Only very rarely are we unable to process a video far enough for it to reach the encoding step.
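To make the idea of an intake check concrete, here is a minimal sketch in Python. It is not how alugha actually does it: the extension set is an assumed mapping from some of the formats listed above, and `looks_supported` is a hypothetical helper. A file extension is only a first filter, and a real pipeline would inspect the container and codecs themselves.

```python
from pathlib import Path

# Assumed, illustrative mapping from some of the formats named in the article
# to common file extensions. This is NOT alugha's actual allow-list.
SUPPORTED_EXTENSIONS = {
    ".mp4", ".avi", ".mov", ".mkv", ".m3u8", ".ts", ".mxf", ".asf",
    ".m4a", ".m4v", ".f4v", ".f4a", ".m4b", ".m4r", ".flv", ".vob",
    ".3gp", ".3g2",
}


def looks_supported(filename: str) -> bool:
    """Return True if the file extension is in the assumed allow-list.

    The comparison is case-insensitive, so "clip.MOV" and "clip.mov"
    are treated the same way.
    """
    return Path(filename).suffix.lower() in SUPPORTED_EXTENSIONS


if __name__ == "__main__":
    for name in ["holiday.MOV", "lecture.mkv", "ancient.rm"]:
        print(name, looks_supported(name))
```

A check like this would only decide whether an upload is worth handing to the encoder at all. Whether the video really makes it through is still decided by the encoding step.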

Imagine you had an old Nokia you could film with... Oh yes, it's been a long time since the good old devices ran for a week on one battery charge. I don't have such a device, so it is quite unlikely that I could watch the video if it were online only in the format produced by the Nokia. This is one of the reasons why we need to encode the video.

The world could be that simple, but it isn't, because every manufacturer follows its own ideas. Over time, only a few formats have clearly established themselves. The best known and most widely used standards for adaptive streaming today are MPEG-DASH and HLS. While we previously used HLS with MPEG-TS, we now encode to "fragmented MP4".

This is gonna be cool: We love to constantly improve things and to work as green as possible. Thanks to fragmented MP4, we can now use HLS and MPEG-DASH with the same video file. This ensures that both formats (and thus access to a very large number of devices) are available simultaneously and immediately after encoding. And not only that! We have been able to reduce the size of the videos by up to 60%, dramatically cutting the unnecessary use of storage space. The best thing about it: The quality is not affected!

Yeah, that's the thing... How fast can you actually encode such a video? Until this update, high-performance encoding was not seriously available in our company. We transferred 5 videos into the pipeline at the same time and then processed them one after the other. Since this took place on our standard servers, which we had not optimized in any way, you sometimes had to wait 3-5 hours until the last video was ready. How long it took depended mainly on which videos were in the pipeline.
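Why the last video in a batch had to wait so long can be seen from a small calculation. The following Python sketch assumes five made-up encoding times in minutes. The numbers and the `finish_times` helper are purely illustrative and are not measurements from our old servers.

```python
from itertools import accumulate


def finish_times(encode_minutes):
    """Return the minute at which each video is done when the queue
    is processed strictly one after the other."""
    return list(accumulate(encode_minutes))


# Hypothetical encoding times (in minutes) for a batch of 5 videos.
batch = [20, 45, 30, 60, 90]

done_at = finish_times(batch)
print(done_at)           # [20, 65, 95, 155, 245]
print(done_at[-1] / 60)  # the last video is ready after roughly 4 hours
```

With sequential processing, every video waits for all the videos ahead of it, so a single long film in the queue delays everything behind it.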

After the update, we will be able to encode thousands of entire movies, and more, in HD quality simultaneously! We check the computer clusters available to us worldwide, decide where the most power is available at the moment, move the video there in encrypted form, encode it and bring it back to the object storage, where it is available to the user again. Here we have an encoding rate of about 10:1, so a 90-minute feature film in 1080p (FHD) is available in less than 10 minutes in all formats and resolutions that we offer and can be published immediately. We also query the status of the process several times and can provide exact information on the encoding status of a video.
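The two ideas in this paragraph, choosing the cluster with the most power available and the 10:1 encoding rate, can be sketched in a few lines of Python. This is only an illustration under assumptions: the `Cluster` class, the `free_capacity` metric and the cluster names are hypothetical, and our real scheduler looks at more than a single number.

```python
from dataclasses import dataclass

# Encoding rate of about 10:1, as stated in the article.
ENCODING_SPEEDUP = 10


@dataclass
class Cluster:
    name: str             # hypothetical cluster identifier
    free_capacity: float  # hypothetical "available power" metric


def pick_cluster(clusters):
    """Pick the cluster that currently reports the most free capacity."""
    return max(clusters, key=lambda c: c.free_capacity)


def estimated_encode_minutes(video_minutes, speedup=ENCODING_SPEEDUP):
    """Estimate the encoding time for a video of the given length."""
    return video_minutes / speedup


clusters = [
    Cluster("cluster-a", free_capacity=0.35),
    Cluster("cluster-b", free_capacity=0.80),
    Cluster("cluster-c", free_capacity=0.55),
]

print(pick_cluster(clusters).name)   # cluster-b
print(estimated_encode_minutes(90))  # 9.0
```

For the 90-minute feature film from the text, the estimate is 9 minutes, which matches the "less than 10 minutes" mentioned above.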

Security is a major issue for us. Many of our users use alugha especially in combination with the dubbr, for internal training videos or for (feature) film projects and series. So when a video is uploaded to alugha, these users often want to make absolutely sure that nobody else has access to it, especially not while we move the data back and forth between the encoding boxes. Therefore, the videos are encrypted on both sides to ensure secure "transport".

Encoding was one of our big construction sites, and we deliberately took our time. Over the years we have gained a lot of experience and have been able to incorporate it into the planning. We have spent the last 12 months working on the topic on a massive scale, and during the implementation we also corrected many small errors and closed gaps. This is the gate to a completely new league for us and one of the most important building blocks for offering our customers a much better service. But... after the match is before the match! We still have a lot on the roadmap for this topic!

