Alugha Updates | March 2022 - what's new at alugha

Here at alugha, we love technology and leveraging it in creative ways for our users to provide unique features and a stellar experience.

Why do you understand what you're reading? Because there are many networks that work in your brain at the same time to process the meaning of words or grammar.


Estimated reading time: 7 minutes

I'm sitting in front of a dark window. There's a rhythmic sound in the room. Anxiously my eyes wander from machine to machine. In the air I can see the flickering of the screens around me. A loudspeaker voice breaks through the tense atmosphere: "Then the Baron's driver calls." I take a look at the monitor in front of me. Everything is normal. Through the thick glass I can see the soles of Mr. M.'s feet. His body disappears in a huge white tube.

What is going on in the basement of an East German university hospital is a measurement with magnetic resonance imaging (MRI). The MRI tube is my gateway to the brain. As a brain researcher, I want to use it to get to the bottom of the mystery of language. Mr. M. is helping me with this.

We use language constantly. We talk on the phone, read or watch TV without even thinking about it. Nonetheless, language is quite complicated. Just think of children, who have to learn it first. Or of how difficult it is to learn a foreign language with all its grammar, millions of words and pronunciation. We native speakers, on the other hand, produce over 16,000 words a day as if by magic. Or spend 145 minutes a day writing text messages, at least if we are young women. How does our brain manage?

While we speak, our brain controls around 100 muscles: not only the tongue and larynx, but also the lips, palate, throat, epiglottis and lungs. The teeth and nasal cavity are also important for articulation. Using all of this, we can produce up to 180 words or 500 syllables per minute.

This is only the motor part of speech production. In fact, your brain does much more when you speak. Or take listening to speech: your ears pick up the speaker's sound waves and pass them on to the hair cells of the inner ear. From there, the acoustic signal travels to your auditory cortex, where it is analyzed according to its spatial and temporal characteristics. To understand what you have heard, your brain now needs to compare this information with the words, grammar rules, sentence structures and meanings that it has stored.

Now on to the answer. Its planning, the conceptualization, is pre-linguistic: it is all about the content. Grammar and word forms, which are metrical, phonological and syllabic, come into play during formulation; the result is then translated into motor instructions and passed on to the organs of articulation. All this happens partly serially, partly in parallel or overlapping in time. And all this in a matter of milliseconds, a performance that no supercomputer is capable of yet.

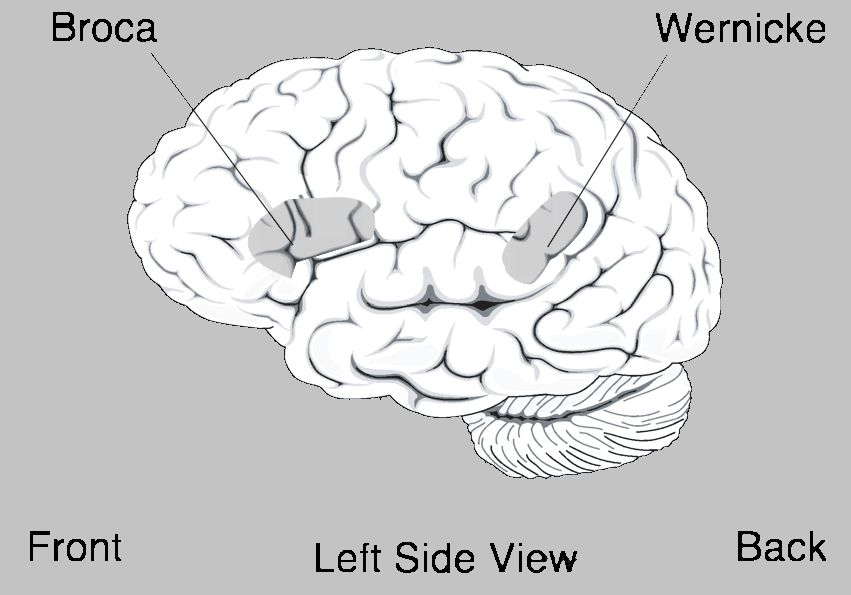

For these so-called higher cognitive functions, we use a large part of our cerebral cortex and numerous fiber bundles, also called fasciculi, that connect the necessary parts of the brain. For a long time, however, the prevailing doctrine was a different one: there are two speech areas, the Broca area in the frontal lobe, responsible for producing speech, and the Wernicke area in the temporal lobe, responsible for understanding speech. The introduction of MRI overturned this model, because scientists were now able to visualize the processes in the brain. This was a huge step. Until then, we had only been able to make assumptions about these processes based on patients with impairments, and on examinations of their brains after their deaths.

Mr. M. is doing well. He's lying quietly, has his eyes closed and is breathing calmly, concentrating on what he's hearing through the speakers: "Then the father kisses the boy." - "Did the father kiss the boy?" Mr. M. thinks about it and presses the button for "yes".

The answer is correct: Mr. M. decoded the sentence structure correctly. A few calculations later, the MRI images show which parts of the brain he used to reach this conclusion. They confirm the research of recent years: there are more than two speech areas in the dominant brain hemisphere. Rather, one whole group of areas decodes the structure of difficult sentences, another the structure of easy sentences, and a third the meaning of words. This happens whether we are speaking or listening. These regions include the Broca and Wernicke areas, as well as the front of the temporal lobe, many parts of the frontal lobe (inferior, anterior and posterior) and the lower parietal lobe. Only the occipital lobe, which is responsible for vision, is not generally involved in processing speech.

Image: https://de.wikipedia.org/wiki/Sprachzentrum

Knowing which brain regions are involved in language is not only exciting; unfortunately, it is also becoming increasingly important: by 2050 the number of strokes in our society will double. Many of them are accompanied by language problems, and the more we know about the anatomy of language, the better we can help. The same goes for patients whose speech-processing regions are damaged by a brain tumor, such as Mr. M., whose tumor is located in the upper left parietal lobe, right where his gray hair is thinning. On screen it stands out from the rest of the brain, dark and walnut-sized. You can see something else, too: water around the tumor that doesn't belong there, a so-called edema. The water is located not in the gray matter but in the white matter.

We know a lot about gray matter. It consists of nerve cells, is located predominantly on the outside of the brain and forms the cerebral cortex. Some functions can be attributed to specific areas of it: seeing, hearing, motor skills, and also speech. The so-called white matter is located inside the brain. It consists of the extensions of the nerve cells, so-called fibers, which are bundled and run through the entire brain, rather like subway lines that connect the suburbs of a big city with each other. They transmit information between the gray matter regions so that these can communicate with each other. However, we do not yet know which fiber bundle transmits which information. I am trying to find this out for language.

Mr. M. is helping me with this, because the edema in the white matter of his brain paralyzes some of the fiber bundles. By giving him language tasks, I can learn about the functions of these bundles: they are probably responsible for the tasks he has trouble completing.

When he gets out of the MRI, Mr. M. is a little shaky. His hands are trembling. I help him into his shoes and hand him a glass of water. The sweat on his forehead shows that the test was exhausting for him. "Please fill in this sheet after drinking your water," I say cheerfully. But Mr. M. picks up the pen immediately. I realize my mistake: my sentence structure was too complex. Mr. M. has problems understanding the structure of complex sentences.

Five weeks later, at his next MRI, he is already much better. Surgeons have removed his tumor, and the unwanted water in his brain has been eliminated with medication. Mr. M. has improved at processing complex sentence structures. The fiber bundles that had been paralyzed by the edema are working again. This supports my hypothesis: the fasciculus arcuatus and the fasciculus longitudinalis superior in the dominant brain hemisphere are involved in decoding complex sentence structures. Further studies must now confirm this hypothesis.

For now, I'm happy with my results: our brain consists of specialized networks of gray and white matter that differ in their anatomy and have their own functions. For example, there is one network responsible for decoding difficult sentences and one responsible for single words. The first is situated mainly in the left hemisphere, while the second is located in both hemispheres. To understand or speak a sentence, our brain uses more than one network at the same time. In a sense, it's like an orchestra: the musicians of an instrument group, e.g. the first violinists, listen to each other and coordinate with each other while producing a sound. And when the sounds of all instrument groups are played at the right time, a concert is created.

Sarah Gierhan is the project manager for science at the Else Kröner-Fresenius-Foundation. Before that, she managed the Internet portal dasGehirn.info at the non-profit Hertie-Foundation and was a consultant at McKinsey & Company. She earned her doctorate in neuropsychology with research on the language network in the brain and worked for many years as a music teacher. As a generalist, she loves to explore new issues, see things from a different perspective and mentor other people with her many-sided experience.

This article was originally published on www.dasgehirn.info.

#alugha

#doitmultilingual

#everyoneslanguage

If, during the nine months of a human baby's gestation, mother and child undergo language therapy based on sounds, noise, verbal explanations related to the use of language, and music, there is a chance that the child will leave its habitat with a head start in handling both spoken and body language. Perhaps by repeating the process with mothers who themselves went through it, and so on across generations, each new child would be stimulated to develop the use of language early, including the language of the known human senses, to the point where the organism develops a special gene that all humans can inherit, since the discovery of new habitats and the habitability of outer space will demand this of us in the accelerated process of adapting to these new conquests of humanity. Because the brain sees with its imagination wherever it finds itself, and imagination is the most universal means of knowledge in any human community....
